This post was written as part of my work at Accenture and can also be found on LinkedIn. It represents my personal views and subjective experience with these tools. I wrote it in August 2024, and I am sure the tools have since evolved a lot and made great strides in precise and accurate image generation, so some of the capabilities described here might NOT reflect their current state.

In recent years, the marketing and design landscape has been dramatically transformed by the advent of generative AI tools. These sophisticated algorithms promise to revolutionize how we create visual content for marketing and design, offering unprecedented speed and seemingly limitless creative possibilities. In this article I will explore a few of the most famous tools on the market (Midjourney, Adobe Firefly, Stable Diffusion) and report on my experience.

The Current Landscape

Generative AI is an exciting technology that harnesses the power of artificial intelligence to create new, original content. At its core, generative AI in design relies on large language models and diffusion models trained on vast amounts of data. These models learn to understand and generate complex patterns, enabling them to translate ordinary text prompts into extraordinary visual creations. This technology powers popular tools like Midjourney, Adobe Firefly, DALL-E and Stable Diffusion, each offering its own flavor of AI-generated artistry.

From 3D text made of fluffy clouds to science fiction concept art, generative AI opens a world of possibilities for anyone who loves to dream and experiment with art, product and character design, unique text treatments, logos, and more. Imagine being able to rapidly prototype a dozen different logo concepts or generate a series of product mock-ups in various styles, all in a matter of minutes. Generative AI tools are making this a reality, allowing designers and marketers to explore creative directions more quickly and efficiently than ever before. These are some of the key use cases of generative AI in marketing and design that I have identified so far:

- Rapid Prototyping: Quickly visualize product concepts, speeding up the ideation phase.

- Personalized Marketing: Create tailored visuals for different customer segments at scale.

- Social Media Content: Generate engaging visuals for posts, stories, and ads consistently.

- Brand Identity Exploration: Produce diverse logo concepts and visual styles for branding projects.

- Advertising: Rapidly produce and test multiple ad creatives for agile campaign optimization.

- Editorial Illustrations: Quickly create visuals for articles, blog posts, and digital publications.

- Event Marketing: Develop cohesive visual identities for event promotion and on-site materials.

- Product Visualization: Generate images of products in various configurations for e-commerce.

Tools like Midjourney, DALL-E, Adobe Firefly, and Stable Diffusion have captured the imagination of designers and marketers alike, each offering unique strengths:

- Midjourney excels in creating imaginative and artistic imagery, often producing stunning conceptual visuals, and shows a strong understanding of complex prompts.

- DALL-E, developed by OpenAI, is known for its ability to generate diverse, highly detailed images in a particular style.

- Adobe Firefly integrates seamlessly with the Adobe Creative Suite, specializing in commercial-ready imagery with more precise control.

- Stable Diffusion, being open-source, offers extensive customization options for developers and tech-savvy users.

But as with any technological revolution, the reality is more nuanced than the hype suggests. While these AI tools have indeed opened new avenues for creativity and efficiency, they also come with limitations that are often overlooked in the excitement surrounding their capabilities.

In this article, we'll explore the strengths and weaknesses of these popular generative AI tools, examine their limitations in meeting specific client needs, and discuss why human expertise remains crucial in the world of design and marketing. As we navigate this new landscape, it's essential to understand both the potential and the pitfalls of generative AI. For now, this technology is still most effective when combined with human expertise that ensures alignment with brand guidelines and business objectives.

Midjourney - A Powerhouse for Boundless Creative Imagery

Prompt: “a modern advanced futuristic city in shades of purple with European landmarks such as Eiffel Tower, the Alps mountains, Colosseum, Brandenburg Gate , the city is floating on several green teal coloured clouds up scattered in the sky --s 250 --style raw --ar 3:2”

Strengths of Midjourney:

- Midjourney has emerged as a powerful tool in the generative AI landscape, particularly excelling in creating high-resolution, visually stunning imagery.

- It generates creative, artistic, and often surreal visuals that can be invaluable for brand design and conceptual work.

- The quality of Midjourney's output is particularly notable, with images often featuring rich details, vibrant colors, and artistic compositions that can rival professional photography or digital art.

- It is available on the Discord platform, although trying it out requires a paid subscription.

- It can produce high-quality images of humans, albeit with some limitations in consistency and specific details.

- It shines in creating atmospheric scenes and stylized portraits, making it useful for mood boards, character concepts, and illustrative work.

- It lets you set the aspect ratio, which is useful for social media posts on Instagram and other platforms.

Midjourney offers advanced features like "--sref" (style reference) and "--cref" (character reference), which allow users to influence the output based on specific images. These options can be particularly useful for maintaining brand consistency or designing a virtual character persona that stays consistent across various images and settings. Another practical advantage of Midjourney is the "--ar" parameter, which sets the aspect ratio of an image. It lets you create landscape, square, or other marketing materials in sizes that already fit the format requirements of social media sites such as Instagram.
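To make the parameters above more concrete, here is a minimal sketch of a helper that assembles a Midjourney prompt string. The function name `build_prompt`, the `ASPECT_RATIOS` table, and the platform-to-ratio mapping are my own illustrative assumptions and not part of Midjourney; only the flags themselves (`--s`, `--style raw`, `--sref`, `--cref`, `--ar`) come from the prompts and features discussed in this post.

```python
from typing import Optional

# Illustrative mapping from a use case to an aspect ratio. These pairings are
# assumptions; check each platform's current format guidelines before use.
ASPECT_RATIOS = {
    "instagram_square": "1:1",
    "instagram_portrait": "4:5",
    "story": "9:16",
    "landscape": "3:2",
}


def build_prompt(
    description: str,
    aspect: str = "landscape",
    stylize: Optional[int] = None,
    raw_style: bool = False,
    style_ref_url: Optional[str] = None,
    character_ref_url: Optional[str] = None,
) -> str:
    """Append Midjourney parameters to a plain text description."""
    parts = [description.strip()]
    if stylize is not None:
        parts.append(f"--s {stylize}")
    if raw_style:
        parts.append("--style raw")
    if style_ref_url:
        parts.append(f"--sref {style_ref_url}")  # style reference image
    if character_ref_url:
        parts.append(f"--cref {character_ref_url}")  # character reference image
    parts.append(f"--ar {ASPECT_RATIOS[aspect]}")
    return " ".join(parts)


prompt = build_prompt(
    "a futuristic city in shades of purple floating on teal clouds",
    aspect="landscape",
    stylize=250,
    raw_style=True,
)
print(prompt)
```

The resulting string can be pasted into Midjourney as a prompt. Keeping the aspect ratios in one table makes it easier to produce the same visual in several formats for different channels.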

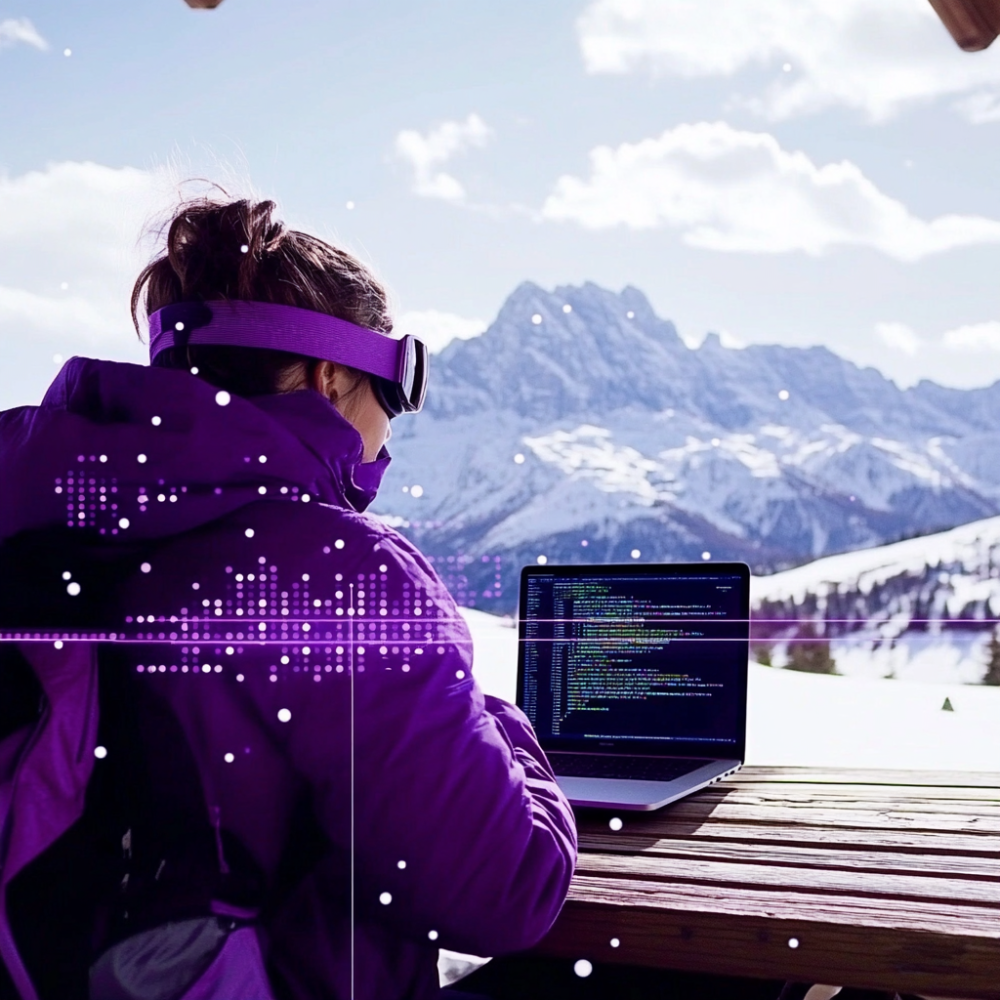

An example of a photo created with Midjourney using a style reference. Prompt: “a photo of an office worker with ski googles seating outside at a wooden table at an alpine traditional restaurant, while coding at a laptop. alpine mountain peaks covered in snow in the background. The sky has some white clouds with purple outlines showcasing the upcoming sunset --sref

Limitations of Midjourney:

However, Midjourney is not without its limitations. One of its most significant drawbacks is its struggle with text generation within images. The tool often produces gibberish or inaccurate text when asked to include specific words or phrases in the image. Additionally, while Midjourney's prompt understanding has improved over time, it can still misinterpret complex or nuanced requests.

Another limitation is the lack of precise control over image composition. Users may find it challenging to specify exact placements of objects or to consistently generate a specific number of elements in a scene. This can be frustrating when trying to create images that require precise layouts or specific object arrangements. While Midjourney excels in creating visually appealing and creative images, it may not be the best choice for projects requiring exact specifications or precise text inclusion. Its strengths lie in generating inspirational, artistic content rather than in producing technically accurate or highly controlled visual outputs.

I am convinced that by investing effort in tweaking the prompts, weights, style references, and negative prompts, and by experimenting with Vary Region, you can eventually get the tailored results you were hoping for with the first prompt. The question then is: is it worth the time and effort? I will let you be the judge of that.

Adobe Firefly - Pioneering Ethical AI in Creative Design

Adobe Firefly stands out in the generative AI landscape with its unique approach to ethics and integration within the Adobe ecosystem. This tool is not just another image generator; it's a family of creative generative AI models designed specifically for Adobe products, with a strong focus on ethical AI practices and copyright considerations. Adobe Firefly can be tried out by anyone for free with the basic account which offers 25 generative credits per month.

Ethical AI and Copyright Compliance:

One of Firefly's most significant advantages is its commitment to ethical AI use. Adobe has trained Firefly on a carefully curated dataset that includes Adobe Stock imagery (used in accordance with the Stock Contributor License agreement), openly licensed content, and public domain content with expired copyrights. This approach aims to create a tool that is commercially safe for both individuals and enterprise creative teams. By avoiding unlicensed copyrighted material in its training data, Adobe seeks to minimize the risk of copyright infringement in Firefly-generated content. This is a crucial consideration for businesses and professionals who need to ensure their creative outputs are legally compliant.

Strengths of Adobe Firefly:

- Easily transfer AI-generated content to apps like Photoshop and Illustrator for further refinement.

- Incorporate AI-generated elements into existing projects: the integration with Adobe's suite of creative tools offers a familiar experience for those already in the Adobe ecosystem.

- Text effect creation (e.g., adding decorative images to text) is strong and precise and it is my favorite part of this tool.

- Generative removal and fill (removing unwanted elements from photos, removing backgrounds, and adding new elements to photos) is noticeably better than the existing Midjourney capabilities.

- Adobe Firefly is the preferred choice for commercial projects due to its focus on copyright compliance and ethical AI practices.

- Setting aspect ratios, adding reference photos, editing photos for social media platforms.

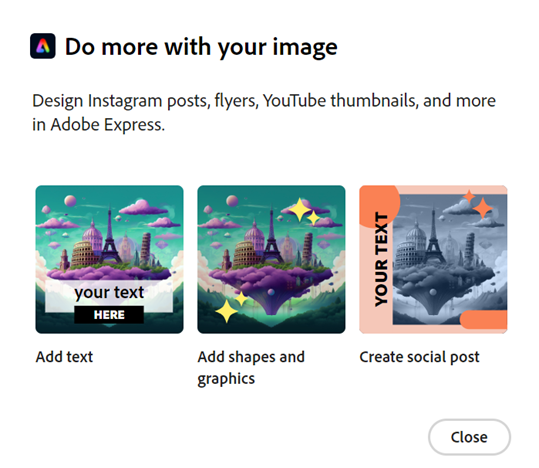

This workflow integration sets Firefly apart from other platforms like Midjourney or DALL-E, offering a more comprehensive end-to-end solution for creative professionals already working within the Adobe ecosystem. More recently, Adobe Firefly has also added the option of using a reference picture as composition or style guidance when generating a new photo. The ability to edit your photo through text, shapes, and graphics offers much more control than Midjourney in my opinion, and it is one of the main reasons I use Adobe Firefly. However, the photo quality is not my favorite; the results look like an unfinished product to me. But I can see how the image style might be a matter of preference for the user.

Adobe Firefly gives the user much more control over editing generated photos, especially when adding text to them or creating materials with text for social media websites.

Limitations of Adobe Firefly:

- Image Quality: While capable of producing photo-realistic images, Firefly's outputs are generally considered less aesthetically exciting and detailed compared to Midjourney's.

- Dependability & prompt understanding: Currently, Firefly is considered less dependable than Midjourney in terms of consistent output quality and understanding the nuances of a prompt.

- Artistic Depth: Firefly's generated images may lack the depth and realism seen in Midjourney's outputs, particularly in complex scenarios and futuristic themes.

- Text-to-Image Capability: Midjourney is currently considered better at creating images from text prompts, and its images tend to be better liked by audiences.

In my opinion, while Adobe Firefly has significant strengths, particularly in terms of commercial usage and integration with Adobe's ecosystem, it currently lags behind Midjourney in some aspects of image quality and artistic creativity. However, Firefly's potential for seamless workflow integration with the Adobe suite makes it a promising tool for users already familiar with Adobe. Last, but not least, Adobe's text and image editing options like generative erase and fill are already significantly easier to use and apply, especially when it comes to creating social media and marketing posts, flyers, presentations, or videos for a specific brand. Ultimately, the choice between Midjourney and Adobe may depend on the user's specific needs: commercial safety and workflow editing integration (Adobe Firefly) versus artistic quality and realistic photography (Midjourney).

Stable Diffusion Models - Open-Source Power with Customization Potential

Stable Diffusion has emerged as a prominent player in the generative AI landscape, distinguished by its open-source nature and the flexibility it offers to developers and advanced users. This tool has gained significant traction in the AI community for its capability to generate high-quality images from text prompts, much like its counterparts Midjourney and Adobe Firefly. Several teams currently offer models of this kind, and these are the most famous ones: Stable Diffusion from Stability AI, Hyper-SD from ByteDance, and the latest competitor in the game, Flux from Black Forest Labs. The main difference between these tools and the previous two is that they are open-source and can run locally on a consumer laptop without the need for extreme GPU power (depending, of course, on the number and resolution of the generated images).

Strengths of Stable Diffusion models:

- Open-Source Flexibility: As an open-source project, Stable Diffusion allows for extensive customization and fine-tuning. Developers can modify the model, train it on specific datasets, and integrate it into various applications.

- Privacy through local Deployment: Unlike cloud-based services, Stable Diffusion can be run locally on a user's hardware, offering greater privacy and control over the generation process.

- Customizable Models: Users can fine-tune the model on specific styles or subjects, allowing for more targeted and consistent outputs in niche areas such as defect generation for specific manufacturing industries. Such objects are usually not part of the traditional training material of tools like Midjourney or Adobe Firefly, which focus more on generating beautiful people than on accurately representing the inner parts of a car engine or a surgical instrument.

- Integration Potential: Its open nature allows for seamless integration into existing workflows and applications, making it versatile for various use cases.

- Cost-Effective: For those with the technical know-how, Stable Diffusion can be a more cost-effective solution compared to subscription-based services.

- Community-Driven Development: A large and active community contributes to Stable Diffusion's rapid improvement and the development of various forks and implementations.
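As a rough illustration of local deployment, the sketch below generates one image with the Hugging Face `diffusers` library. It assumes that `torch` and `diffusers` are installed and that the checkpoint `CompVis/stable-diffusion-v1-4` (the one used in the Hugging Face blog post listed in the resources) is acceptable for your use case. The `GenerationSettings` class, the `generate` function, and all default values are hypothetical names and choices of mine, not a recommended configuration.

```python
from dataclasses import dataclass


@dataclass
class GenerationSettings:
    """Illustrative settings; the defaults are assumptions, not tuned values."""
    prompt: str
    model_id: str = "CompVis/stable-diffusion-v1-4"  # assumption: swap in any compatible checkpoint
    steps: int = 30
    guidance_scale: float = 7.5
    width: int = 512
    height: int = 512
    seed: int = 0


def generate(settings: GenerationSettings, out_path: str = "output.png") -> str:
    """Run the model on local hardware and save the resulting image."""
    # Imported lazily so the settings can be prepared without the heavy dependencies.
    import torch
    from diffusers import StableDiffusionPipeline

    # Use the GPU with half precision when available, otherwise fall back to the CPU.
    device = "cuda" if torch.cuda.is_available() else "cpu"
    dtype = torch.float16 if device == "cuda" else torch.float32

    pipe = StableDiffusionPipeline.from_pretrained(settings.model_id, torch_dtype=dtype)
    pipe = pipe.to(device)

    # A fixed seed makes the output reproducible on the same setup.
    generator = torch.Generator(device=device).manual_seed(settings.seed)
    image = pipe(
        settings.prompt,
        num_inference_steps=settings.steps,
        guidance_scale=settings.guidance_scale,
        width=settings.width,
        height=settings.height,
        generator=generator,
    ).images[0]
    image.save(out_path)
    return out_path


settings = GenerationSettings(prompt="a cat riding a skateboard, photo")
print(settings)
# generate(settings)  # uncomment to run; downloads the model weights on first use
```

Because everything runs on your own machine, neither the prompt nor the generated image leaves it, which is the privacy benefit mentioned in the list above. Generation on a CPU works but is considerably slower than on a GPU.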

Limitations of Stable Diffusion models:

Stable Diffusion represents a powerful and flexible option in the world of AI image generation. Its open-source nature offers unparalleled customization potential, making it a favorite among developers and tech-savvy users. However, this comes at the cost of a steeper learning curve and a potentially more complex implementation compared to more user-friendly alternatives. For those willing to invest time in upskilling and money in local computing resources, Stable Diffusion can be a highly versatile tool in both the artist's and the business consultant's arsenal.

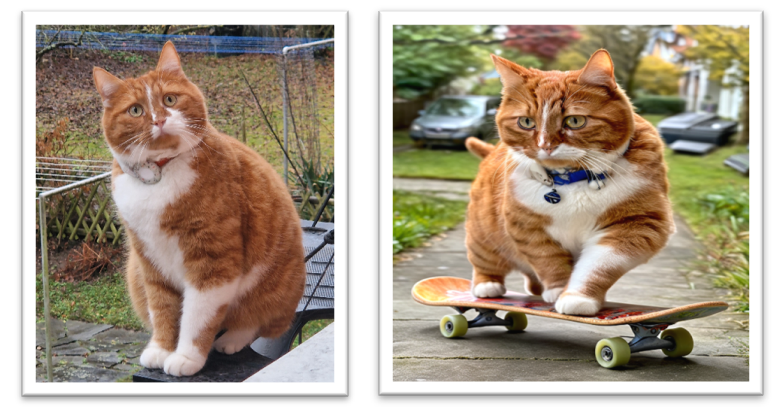

Left photo: My cat Thor on the ladder. Right photo: A generated photo of Thor riding a skateboard (I wish!), created with a Stable Diffusion model fine-tuned with DreamBooth.

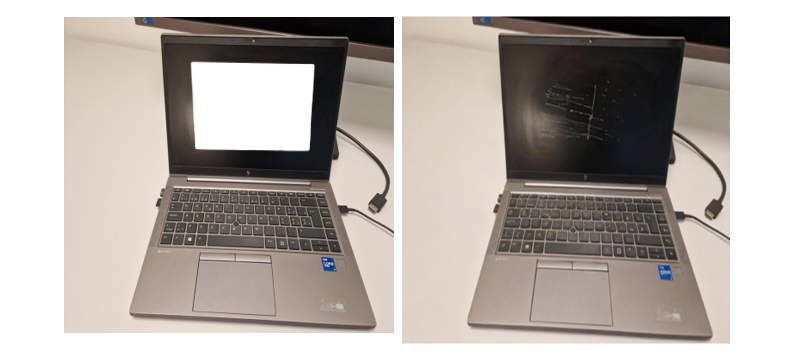

Stable Diffusion allows precise editing and the creation of custom textures using techniques such as inpainting and outpainting (generative fill). Here the photo on the left shows my laptop, where the white area marks the region in which I want the model to add “realistic looking scratches”.
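The white area described above is an inpainting mask. The sketch below shows the idea using only the Python standard library: it builds a rectangular mask in which white pixels (255) mark the region to regenerate and black pixels (0) mark what should stay untouched. The helper names and the rectangular shape are illustrative assumptions; in practice the mask is usually painted by hand in a UI, and conventions can differ between tools.

```python
from typing import List, Tuple

Mask = List[List[int]]


def make_rect_mask(width: int, height: int, box: Tuple[int, int, int, int]) -> Mask:
    """Return a height x width mask: 255 inside the box (repaint), 0 elsewhere (keep).

    box is (left, top, right, bottom) in pixels; right and bottom are exclusive.
    """
    left, top, right, bottom = box
    return [
        [255 if left <= x < right and top <= y < bottom else 0 for x in range(width)]
        for y in range(height)
    ]


def repaint_fraction(mask: Mask) -> float:
    """Share of the image that the model is asked to regenerate."""
    total = sum(len(row) for row in mask)
    white = sum(1 for row in mask for value in row if value == 255)
    return white / total


def save_pgm(mask: Mask, path: str) -> None:
    """Write the mask as a binary PGM (P5) grayscale image."""
    height, width = len(mask), len(mask[0])
    with open(path, "wb") as f:
        f.write(f"P5\n{width} {height}\n255\n".encode("ascii"))
        for row in mask:
            f.write(bytes(row))


# Tiny example: an 8x4 image where a 4x2 block in the middle gets repainted.
mask = make_rect_mask(8, 4, box=(2, 1, 6, 3))
print(repaint_fraction(mask))  # 0.25
```

A mask like this is passed to an inpainting model together with the original photo and a prompt such as “realistic looking scratches”. Keeping the white region small limits the change to the part of the image you actually want to alter.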

Stable Diffusion stands at the forefront of AI image generation, offering unparalleled flexibility and power. As an open-source platform, it provides extensive customization opportunities, making it a favorite among developers and tech enthusiasts. While its learning curve may be steeper than some user-friendly alternatives, the potential rewards are immense for those willing to invest time in mastering these tools.

For artists and business consultants alike, Stable Diffusion can be an incredibly versatile asset, especially considering the rapid advancements within the open-source community. By allocating resources to local computing power and upskilling, users can harness a tool that's not just powerful today, but continually evolving.

The field of open-source AI image generation is brimming with exciting developments. In an upcoming post, I'll delve deeper into the world of open-source Stable Diffusion models, exploring how you can leverage these cutting-edge tools to enhance your creative and professional endeavors. Stay tuned for insights that could revolutionize your approach to AI-powered imagery!

Resources:

https://docs.midjourney.com/docs/quick-start

https://www.adobe.com/products/firefly/discover/firefly-vs-midjourney.html#

https://stability.ai/stable-image

https://huggingface.co/blog/stable_diffusion

https://github.com/AUTOMATIC1111/stable-diffusion-webui/blob/master/screenshot.png